Faster deployments

Back in September, we announced our first fastdowntime deployment. That was a new way to do deployment involving DB changes. This meannt less downtime for you the user, but we were also hoping that it would speed up our development by allowing us to deliver changes more often.

Back in September, we announced our first fastdowntime deployment. That was a new way to do deployment involving DB changes. This meannt less downtime for you the user, but we were also hoping that it would speed up our development by allowing us to deliver changes more often.

How can we evaluate if we sped up development using this change? The most important metric we look at when making this evaluation is cycle time. That’s the time it takes to go from starting to make a change to having this change live in production. So before fastdowntime, our cycle time was about 10 days, and it is now about 9 days. So along the introduction of this new deployment process, we cut 1 day off the average, or a 10% improvement. That’s not bad.

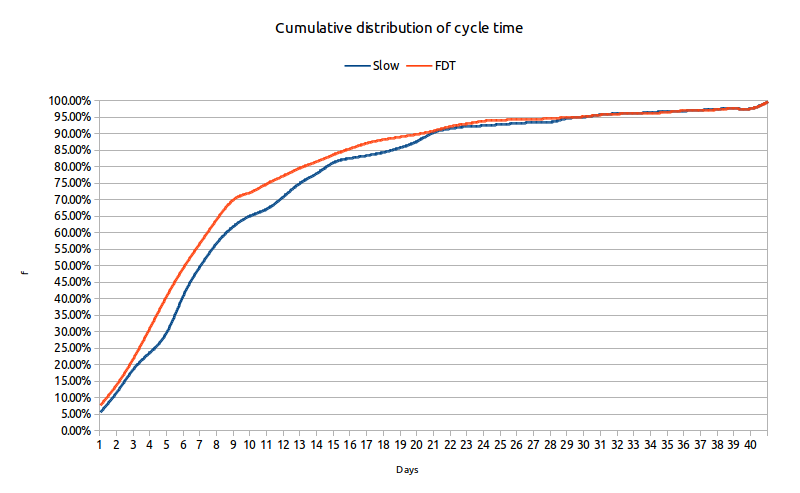

But comparing the cumulative frequency distribution of the cycle time with the old process and the new will give us a better idea of the improvement.

On this chart, the gap between the orange (fastdowntime deployment) and blue (original process) lines shows the improvement to us. We can see that more changes were completed sooner. For example, under the old process about 60% of the changes were completed in less than 9 days whereas about 70% were completed under the same time in the new process. It’s interesting to note that for changes that took less than 4 days to complete or that took more than 3 weeks to complete, there is no practical difference between the two distributions. We can explain that by the fact that things that were fast before are still fast, and things that takes more than 3 weeks would usually have also encountered a deployment point in the past.

That’s looking at the big picture. Looking at the overall cycle time is what gives us confidence that the process as a whole was improved. For example, the gain in deployment could have been lost by increased development time. But the closer picture is more telling.

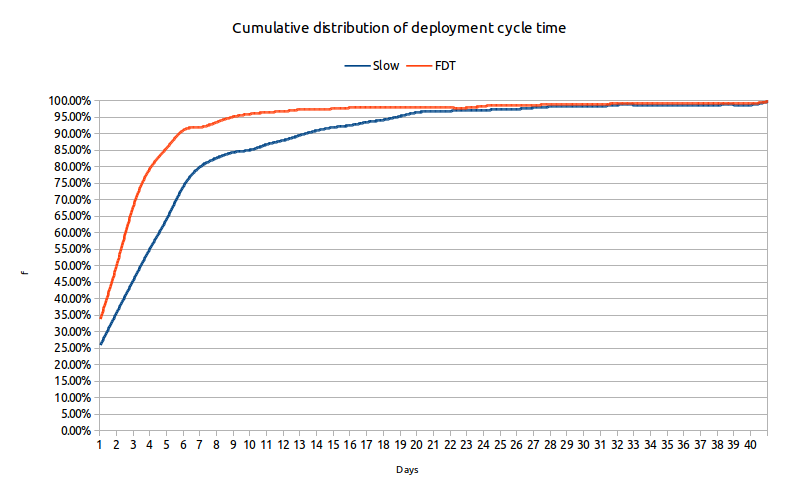

The cycle time charted in this case is from the time a change is ready to be deployed until it’s actually live. It basically excludes the time to code, review, merge and test the changes. In this case, we can see that 95% of the changes had to wait less than 9 days to go live under the new process whereas it would take 19 days previously to get the same ratio. So an

improvement of 10 days! That’s way more nice.

Our next step on improving our cycle time is to parallelize our test suite. This is another major bottleneck in our process. In the best case, it usually takes about half a day between the time a developer submits their branch for merging until it is ready for QA on qastaging. The time in between is passed waiting and running the test suite. It takes about 6 hours to our buildbot to validate a set of revisions. We have a project underway to run the tests in parallel. We hope to reduce the test suite time to under an hour with it. This means that it now would be possible for a developer to merge and QA a change on the same day! With this we expect to shave another day maybe two from the global cycle time.

Unfortunately, there are no easy silver bullets to make a dent in the time it takes to code a change. The only way to be faster there would be to make the Launchpad code base simpler. That’s also under way with the services oriented architecture project. But that will take some time to complete.

Photo by Martin Heigan. Licence: CC BY NC ND 2.0.

February 3rd, 2012 at 3:43 pm

Great news!

Has fast downtime had any effect on quantity of output? i.e. how many changes get released each month, where “change” can be revision or card or whatever makes most sense.

(Also, I can’t help wondering if there’s a better graph for this.)

February 3rd, 2012 at 6:09 pm

Actually, throughput (cards completed by week) declined during the period where fastdowntime was in effect. We went from completing on average 30 cards per week to 25 cards. But I attribute this to the fact that we were down two developers for most of this period. Not only, this means that were less people doing work, it also diminish the availability of the rest of the squads as they had to interview candidates. This drop in throughput affected most the squads that had to replace someone.