Bug Linking Part 1

Published by Curtis Hovey May 29, 2012 in General

The Launchpad team is planning a new feature that will allow you to link bugs to each other and describe their relationship. The general idea is that you can say one bug depends on the fix of another.

We’ll be asking you to help us decide the scope of this feature, so look out for invitations to user research sessions over the coming weeks if you’d like to get involved.

Key Issues

There are issues about the kinds of relationship that should be supported and the types of workflows to use them. Before I describe the workflows and relationships that we have discussed, I think I should first write about the existing bug linking features and hacks.

Affects Project or Package

Launchpad has always recognised that a bug can affect many projects and packages. Launchpad defines a bug as an issue that has a conversation. The information in the conversation is commonly public, but it might be private because it contains security, proprietary or personal information. An issue can affect one or more projects and packages, and each will track its own progress to close the bug.

A bug in a package often originates in the upstream project’s code. I assume that when I see a project and a package listed in the same bug that there is an upstream relationship, but that is not always the case. So some bugs affect multiple packages and projects? How do I interpret this? Maybe one project is a library used by other projects. Maybe the projects and packages cargo-cult broken code that requires independent fixes. Maybe the code from one project is included in the source tree of other projects. When I look at the table of affected projects and packages on a bug, I have to guess how they relate. I don’t know if fixes happen in a sequence, or at the same time as each other. Is one project fixed automatically by a fix in another project?

Contributors from all the affected projects contribute to the conversation. The conversation can be hard to read when several projects are discussing their own solution. It is common for everyone from one project to unsubscribe from a bug when the project marks the bug as fixed. This is a case where users are still getting bug mail about an issue that is fixed for them. Maybe there’s more than one issue if there’s more than one conversation happening?

Duplicate

Users commonly report bugs that are duplicates of other bugs. Marking a bug a duplicate of another means that the conversation and progress about an issue is happening somewhere else.

It is common for duplicate bugs to affect different projects from the master bug. Was the duplicate wrong, or maybe Launchpad did not add the duplicate’s affected projects to the master bug’s affected projects?

I can mark your bug to be a duplicate of a bug that you can’t see (because it is private). This is very bad. If I do this, you cannot participate in the conversation to fix the bug, and you don’t know when the bug will be fixed. It is common practice to make the first reported occurrence of a bug the master of all the duplicates, but if the first occurrence has personal information in it, it probably cannot ever be public. Thoughtful users create public versions of bugs and make them the masters of the duplicates so that everyone can participate and be informed.

Links in Comments

Launchpad automatically links text that appears to be a bug number. A user can add a comment about another bug and any user can follow the link to see the bug.

There are many kinds of relationships described in comments which contain linked bugs: “B might be a duplicate of C”, “D must be fixed before E”, “F overlaps with G”, “H invalidates J”, “K is the same area of code as L”, “M is the master issue of N”. I cannot see the status of the linked bugs without opening the bug. Maybe the bug was marked invalid or fixed? Bug comments cannot be edited, so the links in the comments might be historic cruft.

Bug Tags

Bug tags allow projects to classify the themes described and implied in a bug. Projects can use many tags to state how a bug relates to a problem domain, a component, a subsystem, a feature, or an estimate of complexity. Launchpad does not impose an order upon bug tags.

Some projects repurpose/subvert bug tags to describe a relationship between two or more bugs. The tag might describe a relationship and master bug number, such as “dependent-on-123456”, and a handful of bugs will use it. Multiple tags might be used on one bug to describe all the directions of the relationships. A search for the tag will show the related bugs and you can see their status, importance, and assignee. The tag becomes obsolete when all its bugs are closed. There might be more obsolete bug tags than operational ones. Bug numbers embedded in the tags are not updated if one bug becomes the duplicate of another.
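To make the tag hack concrete, here is a minimal sketch of how a script might pull the master bug number back out of a tag like “dependent-on-123456”. The function name bug_from_tag is hypothetical, and the assumption that the number always follows the last hyphen is mine; projects are free to invent other tag shapes. It also shows why the number goes stale: it is just text inside the tag, so nothing rewrites it when the bug is later marked as a duplicate.

```shell
#!/bin/sh
# Hypothetical helper (not part of Launchpad): print the bug number embedded
# in a relationship tag such as "dependent-on-123456".
# Prints nothing and returns 1 when the tag does not end in "-<digits>".
bug_from_tag() {
    tag=$1
    number=${tag##*-}                 # text after the last hyphen
    case $number in
        ''|*[!0-9]*) return 1 ;;      # empty, or contains a non-digit
    esac
    [ "$number" != "$tag" ] || return 1   # the tag had no hyphen at all
    printf '%s\n' "$number"
}

bug_from_tag dependent-on-123456      # prints 123456
bug_from_tag regression || echo "no bug number in tag"
```

The relationship itself (“dependent-on”) stays an opaque string that only the project’s own conventions can interpret.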

Bug Watches

Bug watches sync the status and comments of a bug in a remote bug tracker to Launchpad. Projects can state that the root cause of a problem is in another project, and that project uses another bug tracker. The bug watch is presented in the bug affects table as a separate row so that users can see the remote information with the Launchpad information.

When the bug watch reports the bug is fixed, the other projects can then prepare their fixes based on the watched project’s changes. Since the information is shown in the bug affects table, it has all the same relationship problems previously described. I do not know if I need to get the latest release from the watched project, or create a patch, or do nothing. Launchpad interleaves the comments from the remote bug tracker with the Launchpad bug comments, which means there is more than one conversation happening. The UI does distinguish between the comments, but it is not always clear that there are two conversations in the UI and in email.

Next

Workflows that use related bugs.

How bug information types work with privacy

Published by Curtis Hovey May 23, 2012 in General

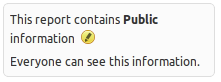

Launchpad beta testers are seeing information types on bug reports. Launchpad replaced the private and security checkboxes with an information type chooser. The information types determine who may know about the bug.

When you report a bug, you can choose the information type that describes the bug’s content. The person who triages the bug may change the information type. Information types may also change as a part of a workflow, for example, a bug may start as Embargoed Security while the bug is being fixed, then the release manager can change the information type to Unembargoed Security after the release.

Testing in phases

In the first phase of this beta, Launchpad continues to share private information with bug supervisors and security contacts using bug subscriptions. The project maintainer may be managing hundreds of bug subscriptions to private bugs, and people are getting unwanted bug mail.

In the second phase of the beta, the project maintainer can share information types with people…the maintainer is only managing shares with a few teams and users and people are not getting unwanted bug mail.

Watch the video of information types and sharing to see the feature in use and hints of the future.

One in a million

Published by Dan Harrop-Griffiths May 16, 2012 in General

Today at some time around 3am UTC, the one millionth (1,000,000th) bug was filed in Launchpad:

https://bugs.launchpad.net/edubuntu/+bug/1000000 (congrats Stéphane Graber!)

This is a huge milestone for everyone that uses and contributes to Launchpad and serves as a great witness to all the achievements, trials and challenges we’ve faced over the past 7 years. Today’s post is made up of contributions from some of the people who’ve worked with Launchpad and on developing Launchpad itself, right from the very start, up until fairly recently, like myself.

We’d love to hear your thoughts and experiences too, so please add a comment at the end if you have a story to share.

Francis Lacoste – Launchpad Manager

“Launchpad is vast. The significant milestones reached could be quite varied. But to me, the most important ones are the ones that enabled a community to use Launchpad for new activities. Thus, the first milestone was in the very earliest days, when the Ubuntu community switched from Bugzilla to Launchpad for tracking Ubuntu bugs!

“Other important milestones were when bzr and Launchpad code hosting were fast enough to host the huge Launchpad source tree itself (back in 2007). Then in 2008, when Launchpad started using Launchpad for code reviews! Other significant milestones were when MySQL joined Launchpad and bzr also in 2008. This opened the door for other big communities to join Launchpad: drizzle and then OpenStack. Finally, more recent milestones of this sort were when we introduced source package branches and Ubuntu started importing all of their packages in bzr: https://code.launchpad.net/ubuntu

“Last year, we introduced derived distributions which is now being used to synchronize with Debian development versions.”

Matthew Revell – Launchpad Product Manager

“There’s so much in Launchpad that it’s almost impossible to settle on a particular highlight. However, PPAs stick out as something of a game-changer. Someone once said that the cool thing about apt isn’t so much apt, but actually the software archive behind it. I love that I can trust the Ubuntu archive to give me what I need in a reliable form.

“However, PPAs have helped bring greater diversity to Ubuntu by allowing anyone to build and publish their own packages in their own apt repository. With the addition of private PPAs and package branches, we have probably the best combination of centralised repos and software from elsewhere that I’ve seen in any operating system.”

Dave Walker (Daviey) – Engineering Manager, Ubuntu Server Infrastructure

“The real shining star is Launchpad bugs, the features and flexibility has really enabled the server team to deliver a quality product. Its rich API allows ease of mashups, and easy task prioritisation.”

Graham Binns – Software Engineer, Launchpad

“Probably the most significant moment for me over the time I’ve worked on Launchpad was its open-sourcing. Suddenly, this big beast that we’d worked on for years was open to outside contributions, and that was and still is incredibly exciting to me.”

Laura Czajkowski – Launchpad Support Specialist

“I think the best thing I’ve seen in a long time on Launchpad was the set downtime and reduced downtime that happens each day. This minimises the effect for all projects hosted on Launchpad, and many people never even notice it is down.”

J.C.Sackett – Software Engineer, Launchpad

“When I started on launchpad, the volume of bug data was a source of constant performance problems. Our 1 millionth bug is noteworthy in that we’re handling 1 million bugs better now than we were handling 500,000 then.”

Curtis Hovey – Launchpad Squad Lead

“Launchpad’s recipes rock. They allow projects to automatically publish packages created from the latest commits to their branch. Users can test the latest fixes and features hours after a developer commits the work.”

Diogo Matsubara – QA Engineer

“Personal package archives combined with source package recipes allows any Launchpad user to easily put their software into Ubuntu and this is a pretty unique feature from Launchpad.”

Tom Ellis – Premium Service Engineer

“It’s been great to see Launchpad grow and scale. A key milestone for me was seeing Launchpad move from a system that was not scaling well to one which has been a great example of continuous development and seeing the web UI improve in usability.”

(Photo by ‘bunchofpants’ on flickr, Creative Commons license)

Testing descriptions for bug status and importance

Published by Curtis Hovey May 8, 2012 in General

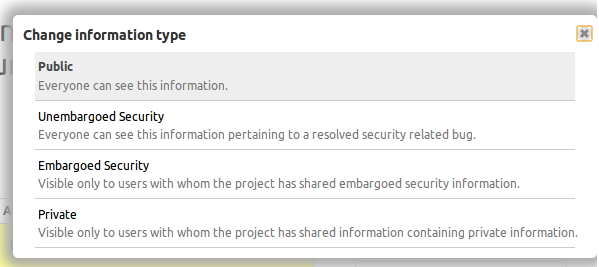

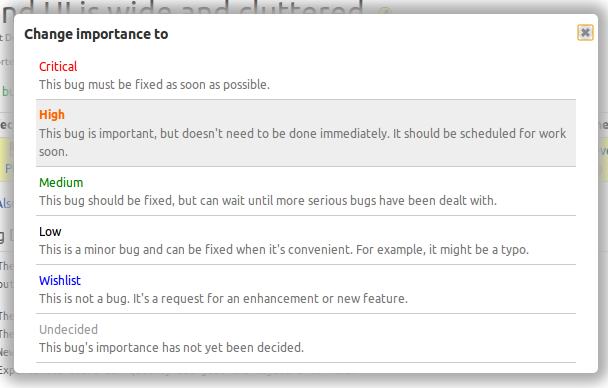

Launchpad beta testers will now see the descriptions of bug status and importance when making updates to the bug page. Launchpad pickers can now show the descriptions of the options you can choose.

Launchpad’s rules for defining a list of options you can choose have always required descriptions, but the only places you could see them were in some forms where they were listed as radio buttons. Bug status and importance were never shown as radio buttons, so their descriptions were only known to people who read Launchpad’s source code. Users need to see the descriptions so that there is a common understanding of terms that allows us to collaborate. The original bug importance descriptions were written in 2006 and only made sense for Ubuntu bugs. We revised the descriptions for the improved picker.

There has been a lot of confusion and disagreement about the meaning of bug statuses. Since users could not see the descriptions, we posted the definition on help.launchpad.net. Separating the status description from the status title did not end the confusion. We revised the descriptions for the improved picker, but I think we need to make more changes before showing this to everyone. The picker appears to rely on colour to separate the choice title from description. Not all choices will have a special colour, and in the case of bug status there are two choices that appear to be the same grey as the description text:

The picker enhancements were made for the disclosure feature. We are changing the presentation of bug and branch privacy to work with the forthcoming project sharing enhancements. Early testing revealed that users need to know who will be permitted to see the private information when the bug is changed. This issue was similar to the long standing problem with bug status and importance. We decided to create a new picker that solved the old problem, that we could then reuse to solve the new problem.

Five kinds of branches

Published by Curtis Hovey May 7, 2012 in General

I do not like the Launchpad branch page. It is rarely informative and I would like to ignore it, but there are a few tasks that I can only perform from that page. I think the page could be better if it were possible for me to state the branch’s purpose.

I sat down with Huw, the Launchpad team’s designer, to discuss the page almost two years ago. He was struggling to make sense of the branch page from a developer’s perspective. At that time I concluded that I disliked the page because it treats all branches as if they have the same needs, which ignores the fact that every branch has a purpose. A branch’s purpose determines what I need to know and what actions I can take. I saw two purposes at the time, but I recently concluded in a sleepless state that there are five purposes. Maybe this was a delusion created by the fever. Let’s see if I still think that at the end of this post.

The branch page is missing:

- A statement of intent

What is the branch’s purpose? This determines the action I can perform and the information I need to see. Is this branch trunk, a supported series, for packaging, a feature, or a fix?

- A record of content

I want to browse the files of some branches, while for other branches I only care about the diff.

- A testament of accomplishment

A branch’s purpose determines its goal. The goal of most branches is to merge with another branch. Some branches, though, must create releases or packages.

The features a branch needs are mostly determined by how long the branch is expected to live:

- Short-lived branches

The branch is often based on trunk, its purpose is to fix a bug or add a feature (bugs or blueprints), and its goal is to merge into its base branch.

- Long-lived branches

The branch is often the basis of other work. It is the sum of many merges, often summarised as milestones. The goal of the branch is to create releases.

Trunk branch

The most important branch to every project is the one designated to be trunk. This branch will live for months, or years. This is the branch I use as the basis for my changes. It is the goal of most derived branches to merge with this branch. The goal of trunk is to make releases.

There is no need to show me which bugs are fixed or blueprints (features) are implemented in trunk. Trunk is everything to the project. When we say there is a bug in a project, we really know that bug is in trunk. Launchpad could change how it models bugs to make this case clear. The bug tracker or specification tracker are the only tools that can adequately explain which bugs and blueprints were closed. I might be interested in which bugs and blueprints were recently fixed in trunk though.

The merge action does not make sense. It is the goal of other branches to merge with trunk…trunk does not merge with other branches! Well, this is not entirely true in the packaging case, which I will explain shortly. I am interested in seeing the list of recently proposed merges into this branch. The branch log shows me which branches were merged.

I want Launchpad to show me the bugs and blueprints that the project drivers plan to fix. Will my bugs be solved soon? Can I contribute? Launchpad actually can show me this on the series page. Launchpad separates the concept of planning changes (features and fixes) from branches. There is good reason for this, but the separation of information creates a chasm that many developers fail to cross.

We commonly organise change into milestones, and each milestone may culminate in a release. A series of milestones represent continuity and compatibility. Distributions have a series without having a trunk branch. There are also projects that do not produce code, but have bugs and blueprints. Launchpad is also a registry of projects and releases that are packaged in Ubuntu.

Launchpad creates a trunk series for every registered project because we believe all projects should state their intent and distinguish between different bases of code. But where is the code? Where is my branch? I commonly see projects with series information without a branch, and I commonly see projects without series information, with tremendous branch activity. It is difficult to contribute when you cannot see what is happening and what needs to happen. Project maintainers are equally frustrated; Launchpad is not helping them to create releases from their trunk branch.

Launchpad asks me to link a branch to a series, but I think most developers want to state that their branch is the trunk series, or is a supported series. The branch is elevated to a new role and it gets the features that the role needs. I want one page about the trunk branch, not a page about planning and a page about branch metadata.

Supported series branch

A supported branch represents a previous project release. It is a historic version of trunk with its own bugs and features. Like trunk, the linked bugs and branches do not make sense, nor do I want to merge a supported branch into another branch. A supported branch can live for months or years. Some fixes (branches) will be merged into the supported branch. Feature branches are never merged into supported branches.

In the case of a supported branch, I most want to know the milestones/releases that represent the life of support. I want to know which fixes in trunk will be backported to the supported branch. I might be interested in knowing the fixes (branches) that were recently merged.

Packaging release branch

A packaging branch contains additional files needed to create an installable package from a project’s release. A packaging branch often has a counter-part branch, usually a supported branch, but sometimes trunk. Thus the packaging branch lives for months or years. The packaging branch may only contain the extra files to create a package using a nesting recipe, or it contains repeated merges of the counter-part branch. I am only interested in seeing the differences from the counter-part branch. I do not want to browse all files in the branch.

Packaging branches have their own set of bugs — the files introduce bugs and yet some of the patch files fix bugs in the counter-part branch. Thus we might imagine that the packaging branch is the sum of the bugs in the supported and packaging branches, minus the fixes provided by patches.

Packaging branches are often managed by different people from the project’s developers. Their skills and permissions are often different too. A patch to expediently fix a bug in the packaging branch might take much longer to merge into the supported branch where the bug originates.

Feature (blueprint) branch

A feature branch adds new functions to the code. It is short-lived, measured in days or weeks. The branch is based on trunk and its goal is to merge with trunk.

Feature branches need all the features that the current branch page provides. Feature branches have blueprints that specify what functions are being added and the criteria to know when the feature is done. They may also fix bugs. A recipe can be used to build an unstable package for testing.

Merges are far more complex for feature branches than is implied by Launchpad’s UI. There are many workflows where a feature branch is actually composed of several branches that might be merged with trunk individually, or they are merged as a whole when the feature is deemed stable. Launchpad should encourage me to merge the branch into trunk; merging into another kind of branch doesn’t make sense. The UI does not properly show that I may merge my feature branch into trunk several times, or that several branches might merge into my feature branch.

I am only interested in seeing the changes from trunk. I do not want to browse all the files in the branch. Since a feature branch can be composed of many branches, I want to see the difference between the merges to understand the revision changes.

Fix (bug) branch

A fix branch exists to close a bug. The branch is short-lived, measured in hours or days. Its goal is to merge with its base branch. The older the branch gets, the more likely that it has failed.

It does not have related blueprints or recipes. I am not interested in seeing all the files and code in the branch; I only care about the changes from base.

A fix branch needs to tell me which bugs it fixes. It must encourage me to merge the fix back into the base branch.

Most fixes are based on trunk and will merge with trunk, but when a bug also affects a supported branch, Launchpad might want to encourage me to propose a backport. Launchpad currently allows me to nominate/target a bug to a series (which is ultimately a branch) when there is no fix. I think this is odd. Project development is focused on trunk; fixes are almost always made in trunk first. Once trunk has the fix, it is possible to determine the effort needed to backport it to a supported branch. If a bug is only in a supported branch, then I think it is fine for Launchpad to allow me to nominate it to be fixed, which implies the fix is already in trunk.

Conclusion

Hurray! I still think there are five kinds of branches with different needs. This issue is like a bad word processor which treats a letter, a report, and a novel the same. A good word processor knows the document’s intent and changes the UI and available features so that I can complete my task without distraction. The current branch page emphasises what all branches have in common, but it needs to emphasise the differences so that I know what I need to do. There are no plans to change the branch UI, but contributors are welcome to improve Launchpad. I hope my thoughts will contribute to a proper analysis of features the branch page must support.
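As a compact recap, the five kinds can be written down as a small lookup from purpose to expected lifetime and goal. This is only a sketch of the idea that a branch could declare its purpose: the function name describe_branch_kind and the one-word kind labels are hypothetical, and nothing like this exists in Launchpad. The lifetimes and goals are the ones given in the sections above.

```shell
#!/bin/sh
# Hypothetical lookup (not a Launchpad feature): map a branch's stated
# purpose to the lifetime and goal described in this post.
describe_branch_kind() {
    case $1 in
        trunk)     echo "lives months or years; goal: make releases" ;;
        supported) echo "lives months or years; goal: make releases" ;;
        packaging) echo "lives months or years; goal: create packages" ;;
        feature)   echo "lives days or weeks; goal: merge with trunk" ;;
        fix)       echo "lives hours or days; goal: merge with its base branch" ;;
        *)         echo "unknown branch kind: $1" >&2; return 1 ;;
    esac
}

# Print one summary line per kind.
for kind in trunk supported packaging feature fix; do
    printf '%-10s %s\n' "$kind" "$(describe_branch_kind "$kind")"
done
```

A page that knew this one value could decide whether to show a file browser or a diff, and whether to offer a merge action at all.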

Launchpad Clinic at UDS-Q

Published by Laura Czajkowski April 30, 2012 in Meet the devs

Let’s face it. Launchpad is not without its bugs. Everyone probably has at least one that they’d like to see fixed, and most of those that do also have the ability to fix them, if only they could get a leg-up on the learning curve of Launchpad development.

To that end, Graham Binns and I will be running two Launchpad Development Clinics at UDS-Q. We’ll be there to help you get on the road to getting your bugs fixed, and to offer advice on every step of the Launchpad development process. We’ll have EC2 instances ready for you to develop on, so if you haven’t already gone through the process of setting up local Launchpad development on your machine, you don’t need to worry.

I have created a wiki page on which you should register if you’re going to be attending either of the clinics. Just list on that page your name and the ID of the bug(s) you want to work on. We’ll check the bugs out and get in touch with you if we think they’re too big to work on in the clinics – in which case we’ll try and work with you to get them fixed over a longer period. We’ve added the event to the summit schedule, for Tuesday and Thursday of UDS, so why not sign up and come along!

I’m looking forward to hearing from you all, and to helping you make Launchpad awesome(r).

Contributing to Launchpad

Published by Laura Czajkowski April 26, 2012 in General, Meet the devs

Ever wished there was just one feature you’d like to see enabled in Launchpad? Ever wondered when one of the bugs you have taken the time to report and document would be fixed? Well, how about we help you to get these bugs fixed? Launchpad is a free and open source project; its platform is also open and developed in a transparent fashion. The source code for every feature, change and enhancement can be obtained and reviewed.

This means you can actively get involved in improving it, and the community of Ubuntu platform developers is always interested in helping peers get started. The Launchpad development team are continuously working on areas in Launchpad and other projects, but there just isn’t enough time in the day to get every bug fixed, tested, deployed and out there. What we’d like to do is encourage and help users to get more involved. We would like to get more of the developer community involved in Launchpad, from users who’ve never developed before to experienced hands-on developers, and show you how to get started. There is documentation written up, but we’re going to explain it in a less scary way, in basic steps.

Getting Started

First things first, you need bzr. All of Launchpad’s branches use bzr for source control, so you’ll need to install it using apt-get:

$ sudo apt-get install bzr

Next, you need to create a space in which to do your development work. We’ll call it ~/launchpad, but you can put it pretty much anywhere you like on your system.

$ mkdir ~/launchpad

Because getting all the ducks in a row to make it possible to do Launchpad work takes a while, we’ve actually got a script that does all the legwork for you. It’s called rocketfuel-setup (see what we did there?) and once you’ve got bzr installed, you can fetch and run it:

$ cd ~/launchpad
$ bzr --no-plugins cat http://bazaar.launchpad.net/~launchpad-pqm/launchpad/devel/utilities/rocketfuel-setup > rocketfuel-setup
$ chmod a+x rocketfuel-setup
$ ./rocketfuel-setup

You’ll be prompted for various details, such as your Launchpad username, and you’ll be asked for your password so that rocketfuel-setup can install all the packages that Launchpad needs. After this, you might as well go and have a sandwich, because downloading and building the source code will take at least 40 minutes on a first run.

Once rocketfuel-setup has completed on your machine, your ~/launchpad directory will look something like this:

$ ls ~/launchpad/
lp-branches lp-sourcedeps rocketfuel-setup

The only directory you need to worry about in there for now is lp-branches. As the name suggests, this is where your Launchpad branches are stored.

Now that you’re all set up, it’s time to find a bug to fix and get some coding done!

Where to Begin

Now, it’s fair to say that there are plenty of bugs in Launchpad worth fixing. However, it’s even fairer to say that you don’t want to pick one of the Critical bugs as your first effort for Launchpad development, mostly because the Critical bugs are all fairly complex.

Luckily, the Launchpad team maintains a list of trivial bugs – the ones with simple, one-or-two line fixes that we just haven’t been able to get around to yet. You can find it here on Launchpad.

Once you’ve found a bug you want to fix, you need to create a branch for it. There are some utility scripts to make your life a bit easier here. In this case, use rocketfuel-branch, which creates a new Launchpad branch from the devel branch that rocketfuel-setup created.

$ rocketfuel-branch my-branch-for-bug-12345

You’ll now find that branch in ~/launchpad/lp-branches:

$ ls ~/launchpad/lp-branches
devel my-branch-for-bug-12345
$ cd ~/launchpad/lp-branches/my-branch-for-bug-12345

Now that you’ve got a branch to work in, you can start hacking! Before you do, though, you should talk to someone in #launchpad-dev on Freenode about the problem you’re trying to solve. They will help you figure out how best to solve the problem and how best to write tests for your solution. We call this the “pre-implementation call” (though it can happen on IRC) and it’s very important, especially for first time contributors.

Once you’ve had your pre-imp call, you can get coding. Don’t forget that the people in #launchpad-dev are there to help you as you go. Don’t worry if you get stuck; Launchpad is a very large project and even seasoned developers get lost in the undergrowth from time to time. Thankfully, there’s a team of committed people who are able to wade in with machetes and rescue them. I’m going to stop this analogy now, since it’s starting to wither.

What to do once you’ve written code

Once you’ve finished hacking on your branch, you need to get it reviewed. Launchpad has a feature that makes this really, really simple, called Merge Proposals. Here’s how it works.

First, commit your changes to your branch (you should have been doing this all along anyway, but just in case…) and push them to Launchpad:

$ bzr ci -m "Here are some changes that I made earlier, with a useful commit message."
$ bzr push
Using saved push location: lp:~yourname/launchpad/my-branch-for-bug-12345
Using default stacking branch /+branch-id/24637 at chroot-83246544:///~yourname/launchpad/
Created new stacked branch referring to /+branch-id/24637.

You can now view the branch on Launchpad by using the bzr lp-open command:

$ bzr lp-open
Opening https://code.launchpad.net/~yourname/launchpad/my-branch-for-bug-12345 in web browser

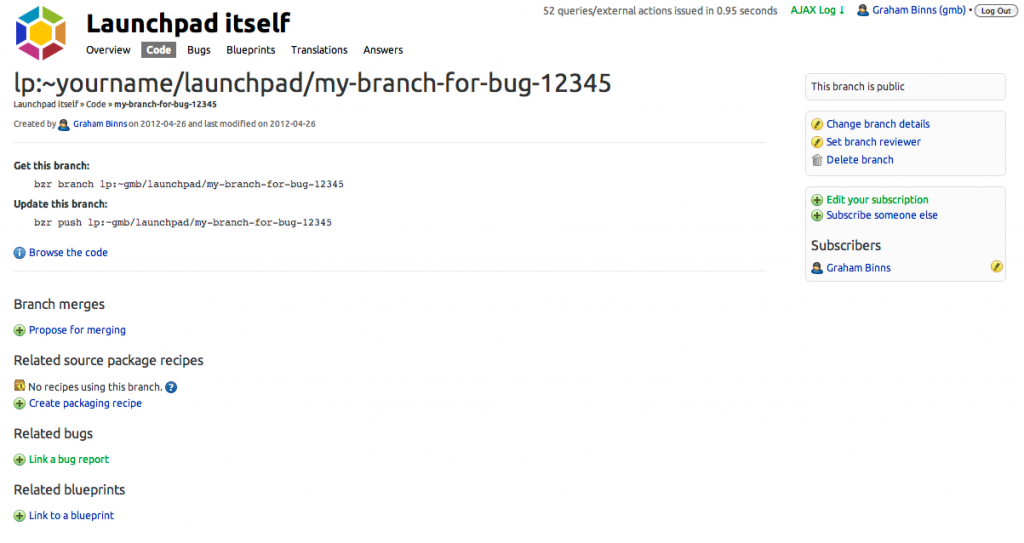

The page that will open in your browser contains a summary of the branch you’ve pushed, and will look something like this:

You can link it to the bug you’re fixing by clicking “Link a bug report”. If you’ve named your branch something like “foo-bug-12345”, Launchpad will guess that you want to link it to bug 12345 in order to save you some time.
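If you are curious what that kind of guess might involve, here is a rough sketch that pulls the number out of a branch name containing “bug-” followed by digits. It is an illustration only: the function name guess_bug_from_branch is made up, and the matching rule is an assumption, not Launchpad’s actual logic.

```shell
#!/bin/sh
# Hypothetical sketch (not Launchpad's real rule): guess a bug number from a
# branch name that contains "bug-<digits>", e.g. "foo-bug-12345".
# Prints the number, or prints nothing and returns 1 when no guess is possible.
guess_bug_from_branch() {
    name=$1
    case $name in
        *bug-[0-9]*) ;;               # needs "bug-" followed by a digit
        *) return 1 ;;
    esac
    rest=${name##*bug-}               # text after the last "bug-"
    digits=${rest%%[!0-9]*}           # keep only the leading run of digits
    [ -n "$digits" ] || return 1
    printf '%s\n' "$digits"
}

guess_bug_from_branch my-branch-for-bug-12345    # prints 12345
guess_bug_from_branch tidy-up-docs || echo "no bug number found"
```

Either way, naming the branch after the bug is a cheap habit that saves a step when linking.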

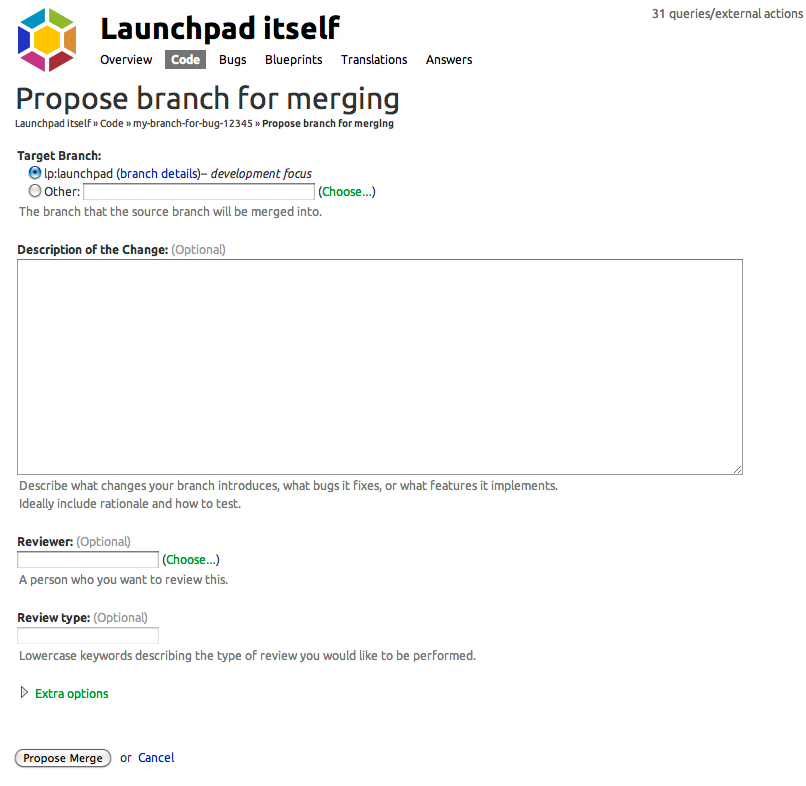

From the branch summary page, you need to create a merge proposal. To do this, click “Propose for merging.” You’ll be presented with this page:

On this page, you’re going to explain what you’ve changed and why. There’s a template for what we refer to as the merge proposal cover letter on the Launchpad dev wiki.

Things you absolutely must include in your cover letter:

- A summary of the problem.

- A summary of your proposed solution.

- Details of your solution’s implementation.

- Instructions on how to test your solution and how to QA it.
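If it helps to start from something, the four required items can be turned into an empty skeleton to fill in before pasting it into the merge proposal. This is a sketch only: the function name cover_letter_skeleton and the heading wording are made up from the list above, and it is not the template from the Launchpad dev wiki.

```shell
#!/bin/sh
# Hypothetical helper: print an empty cover letter with one heading per
# required item. The headings mirror the list above, not the dev wiki template.
cover_letter_skeleton() {
    cat <<'EOF'
Summary of the problem:

Proposed solution:

Implementation details:

How to test and QA:

EOF
}

cover_letter_skeleton
```

Redirect the output to a file, write a few sentences under each heading, and you have the bones of a cover letter.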

Launchpad Clinic – UDS

At UDS-Q we’re going to run two clinics with the help of Graham Binns, who will be there to help with hands-on setup, walk people through the bugs they would like to work on, and figure out where to start. If you’re interested in taking part in these sessions, please add your name and the bug(s) you are interested in so we can review them ahead of time. There are many to choose from that are tagged with trivial if you want to start there.

We will have an EC2 instance of Launchpad set up to maximise the time available to work on these areas.

Setting up commercial projects quickly

Published by Curtis Hovey April 18, 2012 in Cool new stuff, PPA, Projects, Teams

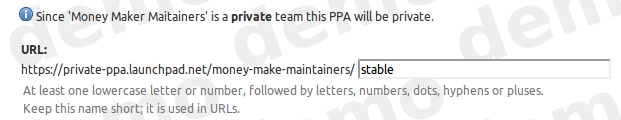

Setting up a commercial project in Launchpad has gotten easier. You can now quickly register a proprietary project and enable private bugs. You can create private teams and private personal package archives (AKA private PPAs or P3As) without the assistance of a Launchpad admin.

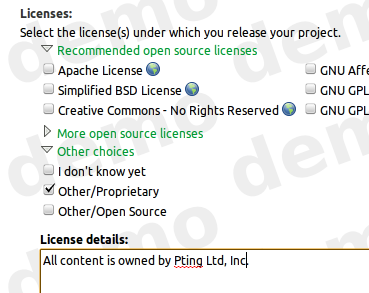

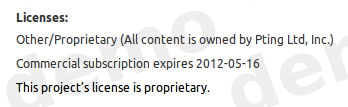

When you select the Other/Proprietary license while registering a project, or changing the project’s details, it is given a complimentary 30-day commercial subscription.

The delay between the moment when a commercial project was registered and when the commercial subscription was purchased and then applied to the project caused a lot of confusion. During this delay, proprietary data could be disclosed. We chose to award the project a short-term commercial subscription, which enables the project to be properly configured while the 12-month commercial subscription is being purchased and applied to the project.

Any project with a commercial subscription can enable:

- Default private bugs

  - Once enabled by configuring the project’s bug tracker, all newly reported bugs are private. You can choose to make the report public.

- Default private branches

  - You can ask a Launchpad admin to configure private branches for your teams. (You will be able to do this yourself in the near future when projects gain proprietary branches.)

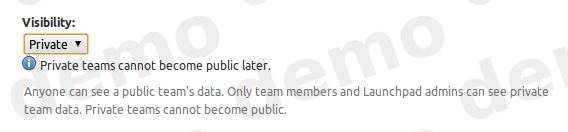

As the maintainer of a project with a commercial subscription, you can register

- Private teams

  - When you register a team, you can choose to set the team visibility to private. The team’s members and data are hidden from non-members.

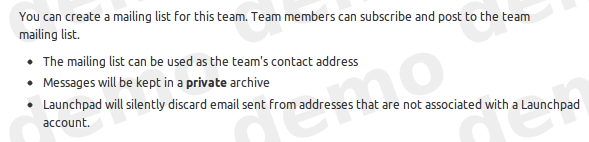

- Private mailing lists

  - When you create a mailing list for a private team, the archive is also private. Only team members may see the messages in the archive.

- Private PPAs

  - When you create a PPA for your public team, you may choose to make it private; private teams can only have private PPAs. You can subscribe users to your archive so that they may install packages without revealing all your team’s members and data to the subscriber.

A secondary benefit of this change is that you can now try Launchpad’s commercial features before purchasing a 12-month commercial subscription. The features will be disabled at the end of the 30 days. Your test data will remain private to ensure your data is not disclosed.

Any open source project may also have a commercial subscription to enable commercial features. You can purchase a commercial subscription at the Canonical store. Commercial subscriptions cost US$250/year/project + applicable V.A.T.

(Photo by Fred Dawson on flickr, creative commons license)

A tale of two travesties

Published by Curtis Hovey April 16, 2012 in General

I have been looking for an easy and reliable way to develop and test Launchpad with Internet Explorer. Neither of the two common approaches used by Ubuntu users allows a Launchpad developer to easily verify that a change works with Internet Explorer. The challenge is to install a working version of Internet Explorer 8 and browse the local development instance of Launchpad. Maybe you can help me find a way to do this?

While Internet Explorer only represents 4% of Launchpad users, recent changes to make Launchpad easier to use made many tasks for IE users impossible to complete. This was unintended. It is a regression, and we treat all regressions as critical issues. The Purple squad reviewed the code and found that a lot of Launchpad JavaScript is disabled for all versions of Internet Explorer without an explanation or fall-back behaviour. There is no documented way to ensure Launchpad works with Internet Explorer, so most developers do not try to make it work.

Microsoft’s developer image of Windows 7 + IE8

Microsoft provides many images of Windows and IE so that Web developers can ensure their code works. The images are compatible with VirtualBox. The installation of the required software is easy, though configuration is tricky. The greatest challenge is creating a reusable Apache2 config for the many Launchpad domains.

Microsoft provides VPC compatible IE images to anyone who wants to verify that a website works with a specific version of IE. You will need about 13GB of disk space to install and run this bloatware that we all have come to expect with Windows. I installed the needed software using these commands:

$ cd /path/to/lots/of/disk/space/VirtualBox/HardDrives

$ sudo apt-get install virtualbox unrar curl

$ curl -L -O "http://download.microsoft.com/download/B/7/2/B72085AE-0F04-4C6F-9182-BF1EE90F5273/Windows_7_IE8.part0{1.exe,2.rar,3.rar,4.rar}"

$ unrar e Windows_7_IE8.part01.exe

I started VirtualBox and changed the preferences to use the path where I had lots of disk space. I created a new Windows 7 instance using the virtual disk. The disk had to be mounted as IDE (SATA failed) and the network was set as bridged.

IE worked perfectly with Ubuntu’s SSO. I could use Launchpad as I expected. In the cases where I expected a problem, I could see behaviours that confirmed my suspicion about the nature of the defect. This setup is ideal for verifying changes on Launchpad’s qastaging and production servers.

Making this setup work with the Launchpad development instance was very difficult. I hard-coded IP addresses to ensure that Windows and Apache knew the *.launchpad.dev sites. My IP address on my local network was 192.168.1.7 at that moment.

- Windows: Added c:\windows\system32\drivers\etc\hosts which contained

  192.168.1.7 testopenid.dev launchpad.dev code.launchpad.dev answers.launchpad.dev blueprints.launchpad.dev bugs.launchpad.dev translations.launchpad.dev

- Ubuntu: Copied /etc/apache2/ssl/launchpad.crt to a device that I could mount in Windows [1]

- Windows: Added the launchpad SSL certificate to the Trusted root certificate store

  Internet Options → Content → Certificates → Trusted Root Certification Authorities

- Ubuntu: Updated all references in /etc/apache2/sites-enabled/local-launchpad of 127.0.0.88 to 192.168.1.7

- Ubuntu: Added Allow from 192.168.1.* after all occurrences of Allow from localhost 127.0.0.0/255.0.0.0

I could log in, but I discovered that I occasionally needed to rearrange the entries in the Windows hosts file because it has a character limit! Every time my computer’s IP address changes, I need to update the Windows hosts file and the Ubuntu Apache config. I do not mind editing my IP in the Windows hosts file, but I do not like editing the entries or updating the Apache config. I doubt this setup works for developers using LXCs or other VMs to run Launchpad. I am planning to switch to LXC when Precise is released, so this solution may not work for me in a few weeks.
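The two edits that have to be repeated whenever the IP address changes (the hosts entries and the Apache config) could be generated rather than typed. The following is a minimal Python sketch under stated assumptions, not a tested tool: the function names `hosts_lines` and `rewrite_apache_config` are made up for illustration, the `max_len` of 60 is only a placeholder because the actual Windows limit is not stated here, and the sample config is an invented fragment rather than the real local-launchpad file.

```python
from typing import List

LOCAL_ALLOW = "Allow from localhost 127.0.0.0/255.0.0.0"


def hosts_lines(ip: str, names: List[str], max_len: int = 60) -> List[str]:
    """Spread host names over several hosts-file lines that all start with ip.

    A new line is started whenever adding a name would exceed max_len
    (an assumed limit; adjust it to whatever Windows accepts).
    """
    lines: List[str] = []
    current = ip
    for name in names:
        candidate = current + " " + name
        if len(candidate) > max_len and current != ip:
            lines.append(current)
            current = ip + " " + name
        else:
            current = candidate
    if current != ip:
        lines.append(current)
    return lines


def rewrite_apache_config(text: str, ip: str, old_ip: str = "127.0.0.88") -> str:
    """Replace old_ip with ip and allow the local subnet after each localhost rule."""
    allow = "Allow from " + ip.rsplit(".", 1)[0] + ".*"
    lines = text.splitlines()
    out: List[str] = []
    for i, line in enumerate(lines):
        line = line.replace(old_ip, ip)
        out.append(line)
        following = lines[i + 1].strip() if i + 1 < len(lines) else ""
        # Only add the subnet rule when it is not already the next line.
        if line.strip() == LOCAL_ALLOW and following != allow:
            indent = line[: len(line) - len(line.lstrip())]
            out.append(indent + allow)
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    sites = ["testopenid.dev", "launchpad.dev", "code.launchpad.dev",
             "answers.launchpad.dev", "blueprints.launchpad.dev",
             "bugs.launchpad.dev", "translations.launchpad.dev"]
    print("\n".join(hosts_lines("192.168.1.7", sites)))

    # Invented fragment for illustration only.
    sample = ("<VirtualHost 127.0.0.88:443>\n"
              "    Allow from localhost 127.0.0.0/255.0.0.0\n"
              "</VirtualHost>\n")
    print(rewrite_apache_config(sample, "192.168.1.7"))
```

After the first run the config no longer contains 127.0.0.88, so a later IP change would need the previous address passed as `old_ip`. Keeping an untouched copy of the original config and always rewriting from that copy avoids the problem.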

Wine + Winetricks + IE8

Winetricks is a tool that can install and configure IE8 so that it runs on Ubuntu. Installation is very easy, but the UI’s controls and menus are broken. The greatest challenge is that the security behaviour is different from real Windows and IE: the browser thinks the real Ubuntu SSO and the entire Launchpad development site are insecure, so it does not send the required REFERER header.

I installed the needed software using these commands:

$ sudo apt-get install wine winetricks

$ winetricks corefonts ie8

Configure Wine to work with the Launchpad development instance:

- Add the Launchpad SSL certificate to Wine [1]

  $ wine control

  Internet Settings → Content → Certificates → Trusted Root Certification Authorities

- Run IE

  $ wine iexplore

The UI does not look like IE8, and the broken buttons and menus certainly do not lend any confidence that this works. I can see from the Apache log that my request to https://launchpad.dev/ claims to be MSIE 8. I cannot log in to the website though. I can see in the logs that the REFERER header was not sent, so the post was rejected. The same is true for posting to the real Ubuntu SSO and Launchpad website: all posted forms fail. IE does this when it believes the browser is posting across a security boundary; it is protecting the user. Since the real Windows 7 + IE8 does not see either the development or production instance as insecure, I know that something is misconfigured in Wine. I believe the SSL certificate was installed correctly because the real IE8 accepted it, as did my Ubuntu browsers.

I can make the Launchpad development instance work with the broken Wine IE8 by hacking lp.services.webapp.publication to not require the REFERER header for MSIE 8 when the Launchpad instance’s host ends with “.dev”. This permits me to verify that JavaScript works, but is it really verifying that real IE8 works? I have no confidence in this hack. What else is broken in the setup?
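The shape of that hack can be sketched as follows. This is a hypothetical stand-in written for illustration, not the code in lp.services.webapp.publication: the function names `referer_required` and `accept_post` and the plain-dict request headers are assumptions.

```python
from typing import Dict


def referer_required(user_agent: str, host: str) -> bool:
    """Decide whether a POST must carry a Referer header.

    Hypothetical rule: skip the requirement only for a browser claiming to
    be MSIE 8 talking to a development host whose name ends with ".dev".
    """
    hostname = host.split(":")[0].lower()  # drop any ":port" suffix
    claims_ie8 = "MSIE 8" in user_agent
    return not (claims_ie8 and hostname.endswith(".dev"))


def accept_post(headers: Dict[str, str], host: str) -> bool:
    """Accept the POST if a Referer is present or is not required."""
    if not referer_required(headers.get("User-Agent", ""), host):
        return True
    return bool(headers.get("Referer"))


ie8 = {"User-Agent": "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; Trident/4.0)"}
print(accept_post(ie8, "launchpad.dev"))   # True: exempted on the dev host
print(accept_post(ie8, "launchpad.net"))   # False: Referer still required
```

Because the exemption is keyed on a User-Agent string that any client can send, it only makes sense on a development host, which is why the rule is limited to “.dev”.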

[1] The default Launchpad SSL certificate in the Launchpad tree that is installed by rocketfuel-setup is incomplete. I generated a certificate that properly specified the sites it was for. I installed the better certificate in /etc/apache2/ssl and in my local OpenSSL store. The immediate benefit is that all of my development browsers accept the SSL certificate without warning about wrong domains. I will add the better certificate, and the script I used to generate and install it, to the Launchpad development tree.

An introduction to our new sharing feature

Published by Curtis Hovey April 13, 2012 in Coming changes, Cool new stuff, Projects

Launchpad can now show you all the people that your project is sharing private bugs and branches with. This new sharing feature is a few weeks away from being in beta, but the UI is informative, so we’re enabling this feature for members of the Launchpad Beta Testers team now. If you’d like to join, click on the ‘join’ link on the team page.

What you’ll see

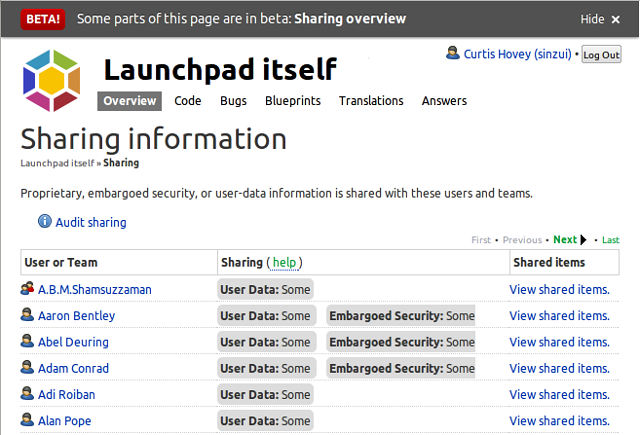

Project maintainers and drivers can see all the users that are subscribed to private bugs and branches. The listing might be surprising, maybe even daunting. You may see people who no longer contribute to the project, or people you do not know at all. The listing of users and teams illustrates why we are creating a new way of sharing project information without managing bug and branch subscriptions.

Once you’re a member of the Launchpad Beta Testers team, you can find the Sharing link on the front page of your project. I cannot see who your project is sharing with, nor can you see who my projects are sharing with, but I will use the Launchpad project as an example to explain what the Launchpad team is seeing.

The Launchpad project

The Launchpad project is sharing private bugs and branches with 250 users and teams. This is the first time Launchpad has ever provided this information. Until now, it was impossible to audit a project to ensure confidential information is not disclosed to untrusted parties. I still do not know how many private bugs and branches the Launchpad project has, nor do I even know how many of these are shared with me. Maybe Launchpad will provide this information in the future.

Former developers still have access

I see about 30 former Launchpad and Canonical developers still have access to private bugs and branches. I do not think we should be sharing this information with them. I’m pretty sure they do not want to be notified about these bugs and branches either. I suspect Launchpad is wasting bandwidth by sending emails to dead addresses.

Unknown users

I see about 100 users that I do not know. I believe they reported bugs that were marked private. Some may have been subscribed by users who were already subscribed to the bug. I can investigate the users and see the specific bugs and branches that are shared with them.

The majority

The majority of users and teams that the Launchpad project is sharing with are members of either the Launchpad team or the Canonical team. I am not interested in investigating these people. I do not want to be managing their individual bug and branch subscriptions to ensure they have access to the information that they need to do their jobs. Soon I won’t have to think about this issue, nor will I see them listed on this page.

Next steps — sharing ‘All information’

In a few weeks I will share the Launchpad project’s private information with both the Launchpad team and the Canonical team. It takes seconds to do, and about 130 rows of listed users will be replaced with just two rows stating that ‘All information’ is shared with the Launchpad and Canonical teams. I will then stop sharing private information with all the former Launchpad and Canonical employees.
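To picture how the listing shrinks, here is a small Python sketch of the idea. It is an illustration only, not how Launchpad computes the page: the function name `sharing_rows`, the row labels, and the user and team names are all hypothetical.

```python
from typing import Dict, List, Set


def sharing_rows(grantees: List[str], team_members: Dict[str, Set[str]]) -> List[str]:
    """List one row per team that is given 'All information', followed by
    the remaining users who only have access through subscriptions."""
    covered: Set[str] = set().union(*team_members.values())
    rows = [f"{team}: All information" for team in sorted(team_members)]
    rows += [f"{user}: via subscriptions" for user in grantees if user not in covered]
    return rows


# Hypothetical users and team, for illustration only.
for row in sharing_rows(["alice", "bob", "carol"], {"example-team": {"alice", "bob"}}):
    print(row)
```

Here alice and bob no longer need their own rows because the team row covers them, while carol remains listed as an exception to review.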

Looking into access via bug and branch subscriptions

Then I will investigate the users who have exceptional access via bug and branch subscriptions. I may stop sharing information with half of them because either they do not need to know about it, or the information should be public.

Bugs and private bugs

I could start investigating which bugs are shared with users now, but I happen to know that there are 29 bugs that the Launchpad team cannot see because they are not subscribed to the private bug. There are hundreds of private bugs in Launchpad that cannot be fixed because the people who can fix them were never subscribed. This will be moot once all private information in the Launchpad project is shared with the Launchpad team.

Unsubscribing users from bugs

Launchpad does not currently let me unsubscribe users from bugs. When project maintainers discover confidential information is disclosed to untrusted users, they ask the Launchpad Admins to unsubscribe the user. There are not enough hours in the day for the Admins to do this. Just as Launchpad will let me share all information with a team or user, I will also be able to stop sharing.

(Image by loop_oh on flickr, creative commons license)