One in a million

Wednesday, May 16th, 2012

Today, at around 3am UTC, the one millionth (1,000,000th) bug was filed in Launchpad:

https://bugs.launchpad.net/edubuntu/+bug/1000000 (congrats Stéphane Graber!)

This is a huge milestone for everyone who uses and contributes to Launchpad, and it bears witness to all the achievements, trials and challenges we’ve faced over the past 7 years. Today’s post is made up of contributions from some of the people who’ve worked with Launchpad and on developing Launchpad itself, from those who were there at the very start to those who joined fairly recently, like myself.

We’d love to hear your thoughts and experiences too, so please add a comment at the end if you have a story to share.

Francis Lacoste – Launchpad Manager

“Launchpad is vast. The significant milestones reached could be quite varied. But to me, the most important ones are the ones that enabled a community to use Launchpad for new activities. Thus, the first milestone was in the very earliest days, when the Ubuntu community switched from Bugzilla to Launchpad for tracking Ubuntu bugs!

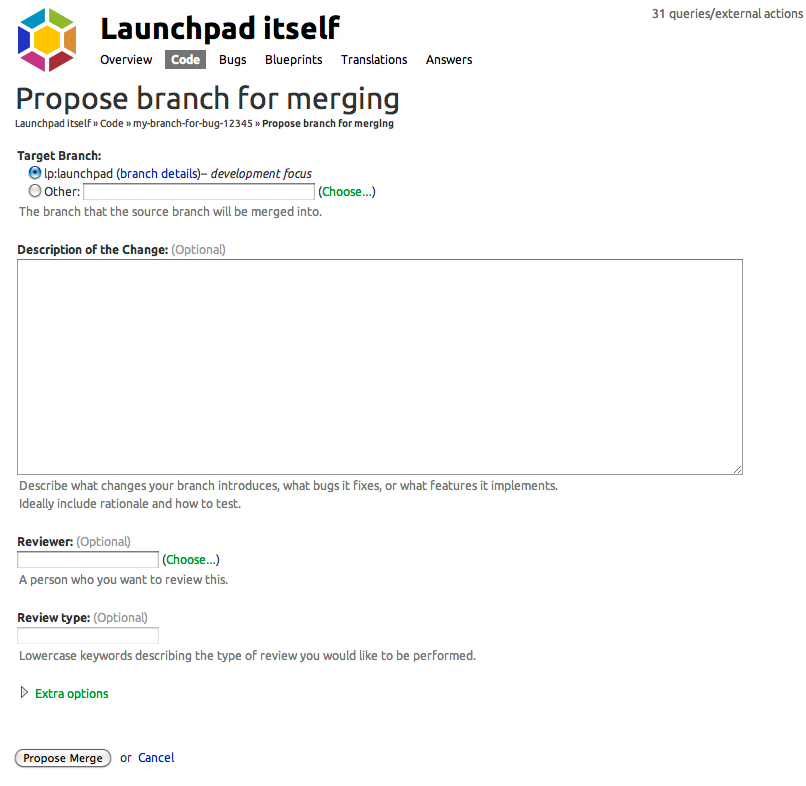

“Other important milestones were when bzr and Launchpad code hosting were fast enough to host the huge Launchpad source tree itself (back in 2007), and then in 2008, when Launchpad started using Launchpad for code reviews! Another significant milestone, also in 2008, was when MySQL joined Launchpad and bzr. This opened the door for other big communities to join Launchpad: Drizzle and then OpenStack. Finally, more recent milestones of this sort were when we introduced source package branches and Ubuntu started importing all of its packages into bzr: https://code.launchpad.net/ubuntu

“Last year, we introduced derived distributions, which are now being used to synchronize with Debian development versions.”

Matthew Revell – Launchpad Product Manager

“There’s so much in Launchpad that it’s almost impossible to settle on a particular highlight. However, PPAs stick out as something of a game-changer. Someone once said that the cool thing about apt isn’t so much apt, but actually the software archive behind it. I love that I can trust the Ubuntu archive to give me what I need in a reliable form.

“However, PPAs have helped bring greater diversity to Ubuntu by allowing anyone to build and publish their own packages in their own apt repository. With the addition of private PPAs and package branches, we have probably the best combination of centralised repos and software from elsewhere that I’ve seen in any operating system.”

Dave Walker (Daviey) – Engineering Manager, Ubuntu Server Infrastructure

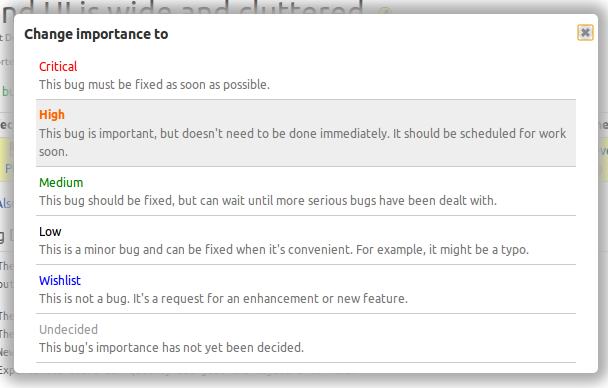

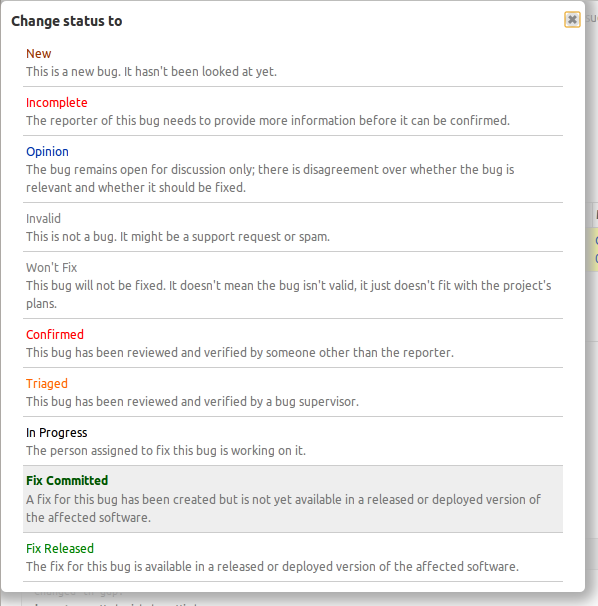

“The real shining star is Launchpad bugs; its features and flexibility have really enabled the server team to deliver a quality product. Its rich API allows easy mashups and easy task prioritisation.”

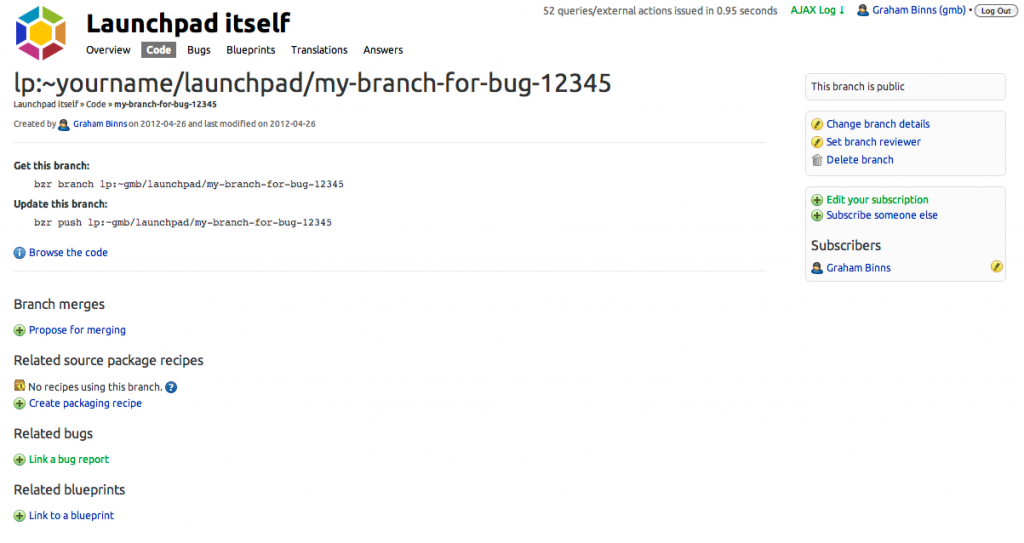

Graham Binns – Software Engineer, Launchpad

“Probably the most significant moment for me over the time I’ve worked on Launchpad was its open-sourcing. Suddenly, this big beast that we’d worked on for years was open to outside contributions, and that was and still is incredibly exciting to me.”

Laura Czajkowski – Launchpad Support Specialist

“I think the best thing I’ve seen in a long time on Launchpad was the set and reduced downtime that happens each day. This minimises the effect on all projects hosted on Launchpad, and many people never even notice that it is down.”

J.C.Sackett – Software Engineer, Launchpad

“When I started on Launchpad, the volume of bug data was a source of constant performance problems. Our 1 millionth bug is noteworthy in that we’re handling 1 million bugs better now than we were handling 500,000 then.”

Curtis Hovey – Launchpad Squad Lead

“Launchpad’s recipes rock. They allow projects to automatically publish packages created from the latest commits to their branch. Users can test the latest fixes and features hours after a developer commits the work.”

Diogo Matsubara – QA Engineer

“Personal package archives combined with source package recipes allow any Launchpad user to easily put their software into Ubuntu, and this is a pretty unique feature of Launchpad.”

Tom Ellis – Premium Service Engineer

“It’s been great to see Launchpad grow and scale. A key milestone for me was seeing Launchpad move from a system that was not scaling well to one which has been a great example of continuous development and seeing the web UI improve in usability.”

(Photo by ‘bunchofpants’ on flickr, Creative Commons license)

